…but can I replicate that at scale? (Spoiler: probably not – but it's been a fun experiment 😉)

Lately, I have been working a lot on the Magic Pages infrastructure. The old server got too small. I needed a new one. And with that, many issues popped up.

One side quest of all of that was that I noticed a decrease in page load speed – even though I recently implemented BunnyCDN as a content delivery network. In theory, that should make all requests faster.

Well, more on that here:

After I wrote that post last week, the whole topic of page speed turned into quite a rabbit hole. I learned a lot in just a few days.

How does DNS routing actually work? What happens in every step of a network request? What happens internally on my server? How can we reduce roundtrips between different instances?

Honestly, my head was spinning. So many levers to pull – but which one will be the right one?

Only one way to find out, right?

So, I tried. And tried. And tried. Many different things.

After a few days of trying, I couldn't believe my eyes. One of the tests I wrote showed that the improvements worked. Magic Pages was faster than any other Ghost CMS host. What??? 🤯

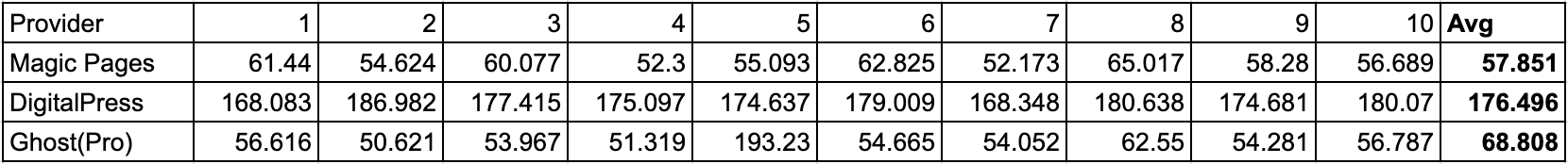

Well, here is a chart – have a look and I'll tell you more about it in a second.

The big issue I faced last week was a super long "time to first byte". That is the time my browser has to wait until it receives the first byte of data from the Magic Pages server.

In theory, the chain of events should be something like this:

- I send a request in my browser.

- My home network routes it to my ISP.

- That ISP looks up what IP address serves the URL I typed in.

- It then forwards my request to that IP.

- That IP is connected to a server, so the server goes looking for the stuff that I requested (that's Ghost).

- The server returns the information to my ISP.

- The ISP routes it to my home network.

- My browser receives everything.

Well, the long "time to first byte" was caused by something that looked more like this:

- I send a request in my browser.

- My home network routes it to my ISP.

- That ISP looks up what IP address serves the URL I typed in.

- It then forwards my request to that IP.

- That IP belongs to BunnyCDN.

- BunnyCDN already has some of the files cached, but not all of them.

- BunnyCDN sends a request to the server it was instructed to query.

- That server (that's Ghost, again) fulfils that request and sends it back.

- BunnyCDN returns the information to my ISP.

- The ISP routes it to my home network.

- My browser receives everything.

So, just a few steps more – but they caused a significant delay. Up to a second sometimes. I still have no idea why, but it was the reality of things 🤷
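One way to narrow down where a delay like that comes from is to split the TTFB into its phases. The sketch below does this with cURL's `--write-out` timing variables. It wasn't part of the tests described here, the function names `phase_deltas` and `ttfb_breakdown` are made up for illustration, and it assumes an HTTPS URL (for plain HTTP, `time_appconnect` is 0, so the TLS figure would be meaningless).

```shell
# Split cumulative cURL timings (in seconds) into per-phase durations (in ms).
# Arguments: time_namelookup time_connect time_appconnect time_starttransfer
phase_deltas() {
  awk -v dns="$1" -v tcp="$2" -v tls="$3" -v ttfb="$4" 'BEGIN {
    printf "dns=%.0f tcp=%.0f tls=%.0f wait=%.0f\n",
      dns * 1000, (tcp - dns) * 1000, (tls - tcp) * 1000, (ttfb - tls) * 1000
  }'
}

# Request one URL and print the phase breakdown for it.
ttfb_breakdown() {
  timings=$(curl -o /dev/null -s \
    -w '%{time_namelookup} %{time_connect} %{time_appconnect} %{time_starttransfer}' \
    "$1")
  # Intentionally unquoted: the four numbers become four separate arguments.
  phase_deltas $timings
}
```

Calling `ttfb_breakdown https://example.com` prints one line with the four phases in milliseconds. A large `wait` value points at the server (or at the CDN fetching from the origin), while large `dns`, `tcp`, or `tls` values point at the network path.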

My goal was therefore to reduce that "time to first byte" as much as possible. And to check what good values for that are, I looked at my competitors. Specifically, Ghost(Pro) and DigitalPress. Both excellent hosting solutions – the point of this is not to bash them, but to show what's theoretically possible.

While I applied different improvements (well, they were experiments, really), I always ran a standardised test (no need to understand that – just putting it here for people who are interested).

I created completely fresh trials on each of the services: Magic Pages, DigitalPress, and Ghost(Pro). I then made sure that the latest version of the Source theme was installed – since I believed that the "standard" theme would be the most optimised. And I deleted all content from Ghost. So, no posts or pages were there.

On my local laptop, I had this script (yes, pretty raw, but it does the job):

```bash
#!/bin/bash

# One domain per provider, in the same order as the provider names below.
declare -a domains=("domain1" "domain2" "domain3")
declare -a providers=("Magic Pages" "DigitalPress" "Ghost(Pro)")

# Usage: calculate_average <count> <value>...
function calculate_average {
  total=0
  for i in "${@:2}"; do
    total=$(echo "$total + $i" | bc -l)
  done
  echo "scale=3; $total / $1" | bc
}

for index in "${!domains[@]}"; do
  echo -n "| ${providers[$index]} "
  results=()
  for i in {1..10}; do
    # time_starttransfer is reported in seconds; convert it to milliseconds.
    ttfb=$(curl -o /dev/null -s -w "%{time_starttransfer}" "https://${domains[$index]}")
    ttfb_ms=$(echo "scale=3; $ttfb * 1000" | bc)
    results+=("$ttfb_ms")
    printf "| %.3f " "$ttfb_ms"
  done
  avg=$(calculate_average 10 "${results[@]}")
  echo "| $avg |"
done
```

It's pretty simple: it takes three domains (one at each provider), and sends ten requests to each using cURL, a developer tool for making network requests. For each request, it measures time_starttransfer – that's basically cURL's equivalent of TTFB (time to first byte). It counts from the start of the request, so DNS lookup, connection setup, and the TLS handshake are all included.

It then puts the results into a neat table, which I can copy into Google Sheets.
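With only ten requests per host, a single slow response can pull the average up quite a bit. A possible addition (not something the test above used) is to report the median next to the average. This is a minimal sketch; `calculate_median` is a hypothetical helper name.

```shell
# Print the median of the numeric arguments.
# For an even number of values, the two middle values are averaged.
calculate_median() {
  [ "$#" -gt 0 ] || return 1
  printf '%s\n' "$@" | LC_ALL=C sort -n | awk '
    { values[NR] = $1 }
    END {
      if (NR % 2) print values[(NR + 1) / 2]
      else printf "%.3f\n", (values[NR / 2] + values[NR / 2 + 1]) / 2
    }'
}
```

In the script above, it could be called the same way as the average, minus the count: `calculate_median "${results[@]}"`.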

And well, the first results were pretty bleak. They confirmed what I saw in the browser. A few hours later, I already had significant improvements (which are already rolled out on Magic Pages) by adjusting a few levers. At that point, Magic Pages was at around 90-100ms TTFB, while DigitalPress and Ghost(Pro) were stable at the values you see in the chart above: around 170ms and 65-70ms respectively.

And then, after falling down the rabbit hole further and further, I completely forgot about the tool. I just had thoughts in my head and followed them. I was in my flow.

After a while, I checked the tool again, and got this result:

I couldn't believe it, so I repeated the test a few times. But the trend stayed like that. Magic Pages had a TTFB of around 55-60ms, DigitalPress 175-180ms, and Ghost(Pro) around 65-70ms.

What started as a test out of frustration accidentally turned into the fastest TTFB of any Ghost CMS hosting out there 🤯

But, take all of that with a grain of salt: results like these are highly situational and depend on many different factors. Most importantly, your location and internet connection.

Apart from that, it is very unlikely that I can replicate this in a production environment, since scaling a service like this is always different from creating an experimental proof-of-concept.

Now, if you are hosting your own Ghost website, here are a few things I did to get to this super-low TTFB:

- I left the Ghost caching configuration as it is – otherwise, a new post would not show up in the frontend, for example.

- I set up BunnyCDN according to this tutorial I wrote, but adapted it further. I turned on Perma Cache (with an appropriate edge rule that excludes the /members and /ghost routes) with replication in all data centers.

- On that note, I figured out that a CDN only makes sense if your visitors are geographically dispersed. I generally had a faster TTFB when the site was directly hooked up to the internet, given that I am only around 300km from the physical location of the servers. However – and that is pretty logical – the further you move away from the physical location, the longer the TTFB gets.

- I hosted my own test site on a fresh Kubernetes cluster in Hetzner's Nürnberg data center (and used this project for it). All my other services (database host, reverse proxy, etc.) are also located in the same data center, probably just a few meters apart from each other. This was, by far, the biggest speed boost (but also the most costly one, for such an experiment 😂)

- I sat in my living room, right next to my router. You might think this won't matter, but when I moved into my office – 3 walls and around 7 meters of distance – TTFB got worse for all hosts (yes, I could have used a cable).
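To illustrate the caching exclusion from the list above: the actual rule lives in the BunnyCDN dashboard, not in a script, but its logic can be sketched in a few lines of shell. Both function names are hypothetical. The `CDN-Cache` response header is an assumption about how the CDN reports cache status, so check the headers of your own responses first.

```shell
# Return 0 (true) if a path should bypass the CDN cache,
# i.e. it belongs to the Ghost admin or the members area.
should_bypass_cache() {
  case "$1" in
    /ghost|/ghost/*|/members|/members/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Print the cache status header of a URL, if the response has one.
# Assumption: the CDN reports it as "CDN-Cache: HIT" or "CDN-Cache: MISS".
cache_status() {
  curl -s -o /dev/null -D - "$1" | grep -i '^cdn-cache:' | tr -d '\r'
}
```

Running `cache_status` twice against the same page is a quick sanity check: if the second request still doesn't report a hit, the page is probably not being cached at the edge.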

So, fun experiment, hm? Now, cross your fingers and pray that I'll find a way to make this production-ready 😁